Driver aids have been around for a long time. To make the task of driving easier, automakers have steadily introduced convenience features, with varying levels of success. The feature we all know as “cruise control” was invented in the 1940s and first appeared in Chrysler automobiles in 1958. By 1960, it was standard equipment in all Cadillacs.

Fast forward to the modern era and you’ll find more robust versions of that original speed control system. Radar-guided cruise control is available in many cars, allowing the driver to set the desired speed, and to choose a “following distance” – the distance they want the system to maintain between their car and the car ahead. Automatic braking, lane departure warnings, and all manner of computer-controlled equipment combine to make driving easier and safer.

The Rise of Semi-Autonomy: “No, We Aren’t There Yet”

It’s only natural that these exponential advances in automobile technology have led to the idea of autonomous driving. According to techopedia.com, an autonomous car is a car that can guide itself without human conduction. Here’s the issue: as of December 2019, no such cars are available for purchase. Massive amounts of money are being invested in the technology by companies like Alphabet and its Waymo unit, Apple, GM, and Tesla, but true autonomous driving is not available.

Simply put, we’re not there. Yet.

That does not keep companies from adding semi-autonomous features to road-going products. General Motors offers SuperCruise in its Cadillac products, and Tesla offers the Autopilot system in all of its cars that are ordered online. Semi-autonomy, however, comes in forms as different as its makers – with surprising results.

The Many Roads to Autonomy, and the Dangers Along the Way

The different companies offering semi-autonomous features arrive at their results in very different ways. This leads to confusion about which systems do what. The National Highway Traffic Safety Administration (NHTSA) has defined its own levels of automation. Emerging technology often has competing definitions as companies seek to brand their semi-autonomous driving systems, and the methods for evaluating the technologies are still in development as well. This lack of clarity becomes a safety issue at times.

The easiest example to cite is Tesla’s “Autopilot” system, though the company is not alone when it comes to safety issues, confusing terminology, or accidents involving its products.

Tesla and Autopilot – Problems by Design?

Tesla is as much a technology firm as an automaker, and as such, it collects and uses massive amounts of data. The company tracks its cars and releases a quarterly vehicle safety report, which contains data on accidents involving its vehicles. Specifically, it covers accidents involving the Autopilot system. In Q3 of 2019, the company registered one accident for every 4.34 million miles of driving with Autopilot engaged, versus one accident for every 2.7 million miles without it. Both numbers include the other active safety features of the cars in use.
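To put the two figures on the same footing, the short Python sketch below converts each one into accidents per million miles and compares them. It uses only the two numbers quoted above; the variable and function names are illustrative assumptions, not anything taken from Tesla's report.

```python
# Figures quoted above from Tesla's Q3 2019 vehicle safety report.
MILES_PER_ACCIDENT_AUTOPILOT = 4.34e6      # Autopilot engaged
MILES_PER_ACCIDENT_NO_AUTOPILOT = 2.7e6    # Autopilot not engaged


def accidents_per_million_miles(miles_per_accident: float) -> float:
    """Convert 'one accident every N miles' into accidents per million miles."""
    return 1e6 / miles_per_accident


rate_on = accidents_per_million_miles(MILES_PER_ACCIDENT_AUTOPILOT)
rate_off = accidents_per_million_miles(MILES_PER_ACCIDENT_NO_AUTOPILOT)

# How many times farther the cars traveled per accident with Autopilot engaged.
ratio = MILES_PER_ACCIDENT_AUTOPILOT / MILES_PER_ACCIDENT_NO_AUTOPILOT

print(f"Autopilot engaged:     {rate_on:.2f} accidents per million miles")
print(f"Autopilot not engaged: {rate_off:.2f} accidents per million miles")
print(f"Miles-per-accident ratio: {ratio:.2f}x")
```

This works out to roughly 0.23 versus 0.37 accidents per million miles, or about 1.6 times as many miles per accident with Autopilot engaged. The calculation only restates the two reported figures; it says nothing about the driving conditions behind each number.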

In this case, the numbers are impressive, but may hide a problem. It starts with how the Autopilot requires driver input, how it differs from other systems on the market, and how the company markets it.

Driver Involvement

Tesla specifically instructs drivers to maintain control of their vehicles, even when Autopilot is engaged. The company uses steering wheel input to monitor driver engagement, not simply contact with the steering wheel, as is widely reported. That approach, however, is simpler than competitors’ systems, which use cameras to monitor drivers’ eyes and focus.

Road-Sensing Systems

Tesla relies on radar and a camera to sense road conditions. Other makers, including Ford and Waymo, use something called LIDAR. The difference in sensors is significant. Tesla’s system uses radar, the camera, and computer software to detect hazards. A road sign and a guard rail require different responses than a car in the lane ahead. But radar can be fooled, as has apparently happened in crashes over the last few years in Connecticut and Florida. In these and other cases, the system failed to react to other vehicles blocking the road. In the Connecticut case, the driver was not even watching the road, but had turned to attend to a dog in the back seat. Also worth noting is that Tesla uses a single forward-facing camera. Stereo cameras would have detected the difference in at least one case, and researchers at Cornell University have found them to be nearly as accurate as LIDAR sensors at a much lower cost.

Marketing

Tesla does seem to contradict itself in how it markets its system versus how it instructs users to behave with it. On one hand, the system is actually called “Autopilot,” which implies that the piloting of the vehicle is automatic, and Tesla claims the vehicles have all the equipment necessary for future autonomous driving. Tesla CEO Elon Musk even famously misused the system in a demonstration, and people may be more apt to copy the man who owns the company than to read pages of instructions. On the other hand, the company makes a point of explaining that the system requires driver attention. Even so, many owners have thought up “hacks” to defeat the monitoring method that the car uses to prompt them to pay attention.

When It All Goes Wrong

Even with millions of miles of pre-market testing, the real world with real owners is a different animal for autonomous driving systems. How a company claims a product works may not match how users actually employ it, and even the most tested technology is sometimes flawed.

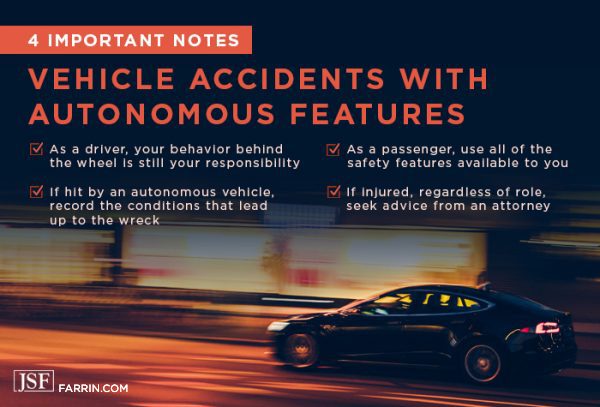

If you or someone you love is involved in an accident with a vehicle with autonomous driving features, here are some things to note.

- If you are driving, your behavior behind the wheel is still your responsibility. These vehicles are packed with sensors. By definition, the car is watching you. Regardless of what you see online or how much confidence the system instills, use these systems in accordance with the owner’s manual. If an accident still occurs, you want to eliminate yourself as a possible cause and, therefore, as a source of liability.

- If you are a passenger, make sure to use all of the safety features available to you. If the driver wants to show you the autonomous driving features, ask that they be employed responsibly. Preventing a dangerous situation is preferable to a lawsuit or a life-changing injury.

- If you are struck by an autonomous vehicle, try to record the conditions that led up to the accident. It may be important later on for your potential claims, depending on how the vehicle behaved during the accident.

- If you are injured, regardless of your role, it may be wise to seek the advice of an experienced personal injury attorney as soon as you’re able. Because of the sense of confidence these autonomous driving systems create, it is easy for drivers to become careless.

Seek Representation From an Experienced Car Accident Attorney

We pride ourselves on fighting for victims, and working to try to ensure they receive the compensation they deserve. We’re not afraid to fight the big cases.
